Time to end secret data laboratories—starting with the CDC

The American people are waking up to the fact that too many public health leaders have not always been straight with them. Despite housing treasure…

Thought Leader: Marty Makary

This piece is by WWSG exclusive thought leader, Sara Fischer.

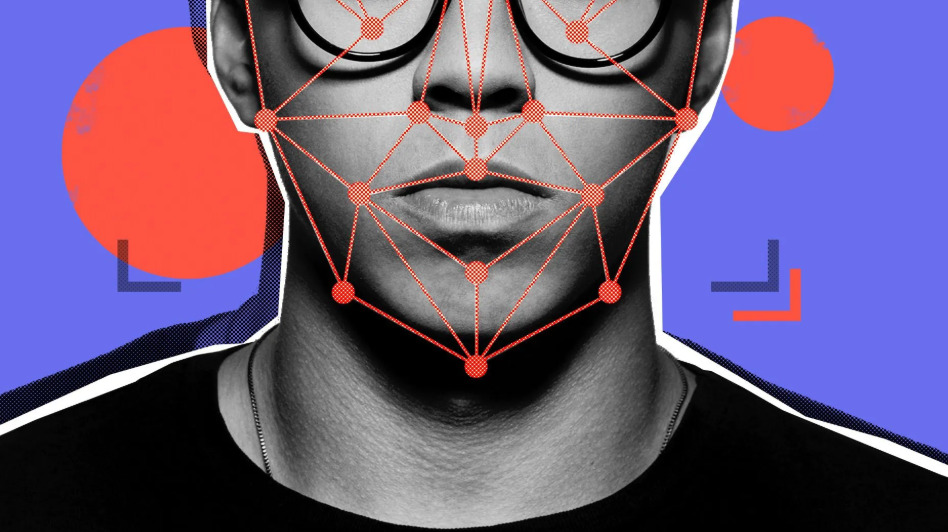

AI-driven deepfakes weren’t the disinformation catastrophe that tech companies and global governments feared ahead of a slew of major elections this year, Meta president of global affairs Nick Clegg told reporters Monday.

Why it matters: The spread of broader conspiracy theories has proven to be a much more challenging misinformation threat than AI-doctored photos or videos.

By the numbers: While Meta said its systems did catch several covert attempts to spread election disinformation using deepfakes, “the volumes remained low and our existing policies and processes proved sufficient to reduce the risk around generative AI content,” the company said.

State of play: Meta introduced new policies this year to prevent everyday users from inadvertently spreading election misinformation using its Meta AI chatbot, including blocking the creation of AI-generated media of politicians.

Zoom out: Meta has invested heavily in broader threat intelligence over the past few years, which Clegg said has helped the company identify coordinated disinformation networks, whether or not they use AI.

The big picture: Nearly half of the world’s population lives in countries that held major elections this year, prompting concerns about AI deepfakes from intelligence officials globally.

Reality check: Images and videos created using generative AI still lack precision, which makes it possible, at least for now, for experts to debunk them.

The bottom line: The most problematic deepfakes aren’t necessarily the most believable ones, but rather, the ones shared by people in power to help propel narratives or conspiracies that support their campaigns.

Ian Bremmer: Trump, Biden & the US election: What could be next?

On GZERO World, Ian Bremmer reflects on this pivotal week in US politics along with media journalist and former CNN show host Brian Stelter and…

Thought Leader: Ian Bremmer

Ian Bremmer: Democrats now have a fighting chance in elections

Ian Bremmer, founder and president of Eurasia Group, said the Democratic National Convention in Chicago slated for August could be competitive following President Joe Biden’s…

Thought Leader: Ian Bremmer